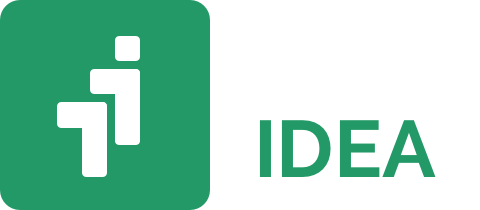

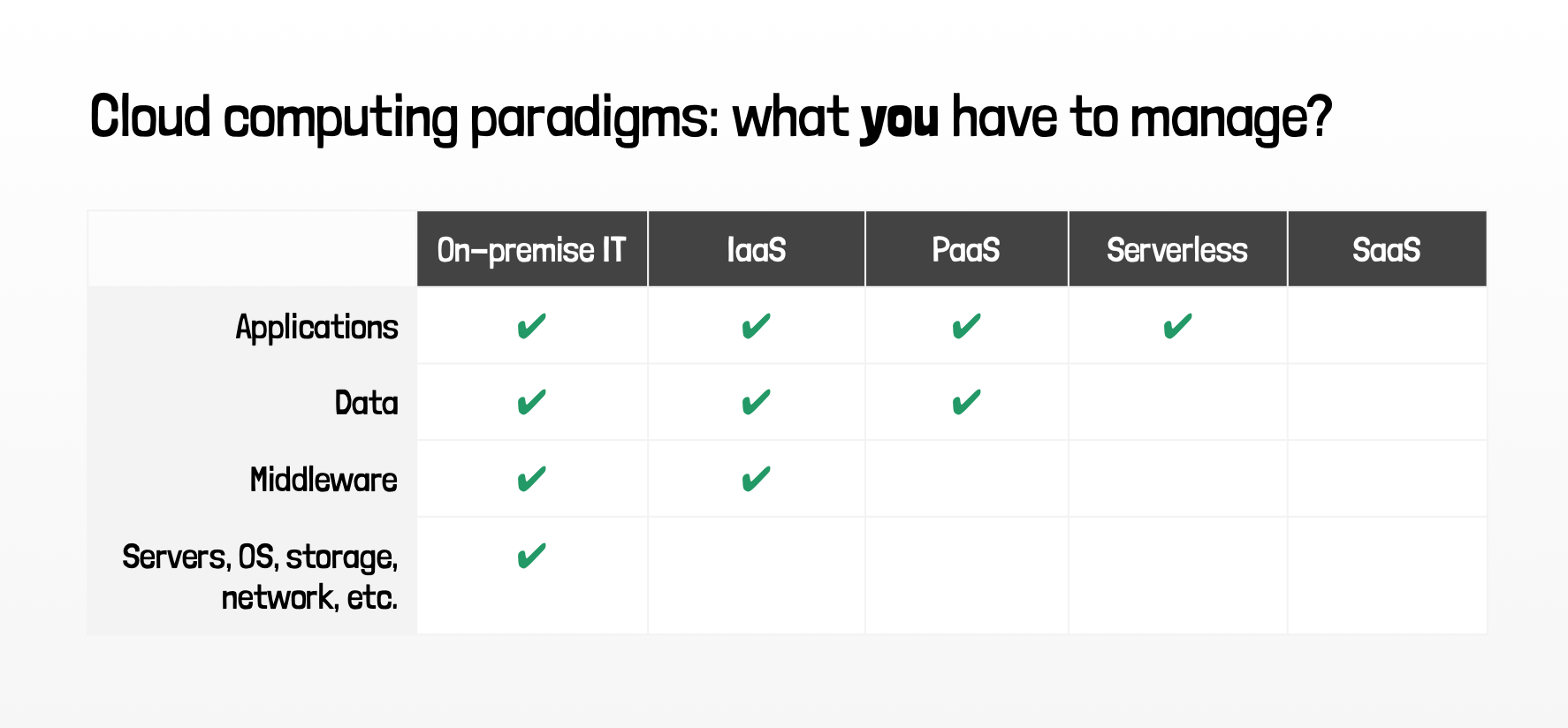

In the second decade of this millennium, cloud computing needs no introduction. This on-demand access, via the internet, to computing resources located in remote data centres has become the new high-quality standard for small and enterprise applications that require high performance, scalability, reliability, cost-efficiency, global reach and agility of development. The reasons for keeping on-premises servers dwindle each year with the rise of secure, pay-as-you-go technologies that let you manage your Infrastructure (IaaS), Platform (PaaS) or Software (SaaS) as-a-Service, "in the cloud" of some CSP (Cloud Service Provider).

Lately, a new kind of cloud computing has emerged thanks to its multiple benefits, sitting in a slot between the PaaS and SaaS offerings:

they call it "Serverless".

Don't be deceived by the name: it doesn't actually mean there are no servers running your applications; rather, it's not up to you to manage, interact with or even see those servers. Hence, the paradigm lets developers focus on the business logic of their application, i.e. on the activity that really matters.

For a few years now, at ITER IDEA, we have fully embraced this new cloud philosophy because of the many benefits it brings to our projects and, therefore, to our customers. In a serverless architecture, the components of an app are launched only when needed (triggered by events, API requests, etc.) and terminated right after. Pay-as-you-go pricing is therefore very convenient in this model, as is the absence of costs for managing servers, operating systems, networks, etc. Moreover, you can design your applications to run for ten users as well as ten thousand, taking advantage of the scalability of the cloud.

Creating a serverless application isn't easy compared to traditional development; cloud-native services require top-notch skills, and the set of competencies to learn and assimilate changes rapidly. Some technologies are so fresh that not even universities can keep up in providing those competencies to their students, so the cloud providers release their own courses and certifications. Nevertheless, acquiring and applying these competencies is worth it and really makes a difference in terms of performance, costs and maintainability of the solutions designed. To give you a reference, when we started switching Papaya (our service used by thousands of students around Europe) to serverless, our costs dropped tenfold and the general experience became visibly more fluid.

So, how is all of this accomplished? Here's an example.

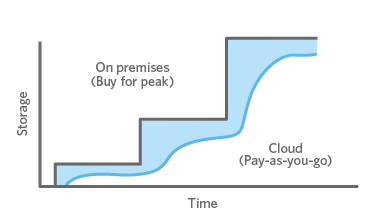

Since Amazon Web Services introduced serverless in 2014 with AWS Lambda, the cloud scenario has evolved rapidly and exciting new serverless architectures have started spreading. Let's dive into one of the simplest implementations, using AWS services as a reference.

In a typical web application, we have a client (computer, smartphone, etc.) communicating with the back-end architecture through API requests. Amazon API Gateway is the serverless service that receives, interprets and routes the requests; for example, if our app is a to-do list manager, the client may have requested a list of tasks. First of all, we need to ensure that the user is authenticated and authorised to access that list of tasks. Amazon Cognito is our service to manage users and permissions (including sign-in and registration flows). Once access is granted, API Gateway invokes the AWS Lambda function that handles the to-do lists; AWS Lambda is the serverless computing service that spins up to execute a request (with our business logic) and then shuts down after returning the result. Next, our function needs to retrieve data (the to-do list) from storage. In the serverless world, NoSQL databases are often preferred for their efficiency and ability to scale. AWS offers DynamoDB, a powerful key-value NoSQL store. Once the Lambda function has retrieved the data we were looking for from DynamoDB, it returns the result to the client, which can finally display it to the user.
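To make the flow above more concrete, here is a minimal sketch of what the Lambda function for the to-do list could look like. It assumes API Gateway forwards the request with the Lambda proxy event format and a Cognito user pool authorizer, so the user's identity arrives in `requestContext.authorizer.claims`. The table contents, the `get_tasks_for_user` helper and the task fields are hypothetical, and an in-memory dictionary stands in for DynamoDB so the example runs anywhere; a real function would query the table through the AWS SDK instead.

```python
import json

# Hypothetical in-memory stand-in for a DynamoDB table keyed by user ID.
# A real function would query DynamoDB through the AWS SDK instead.
FAKE_TASKS_TABLE = {
    "user-123": [
        {"taskId": "1", "title": "Write blog post", "done": False},
        {"taskId": "2", "title": "Deploy to production", "done": True},
    ],
}


def get_tasks_for_user(user_id):
    """Hypothetical helper: stands in for a DynamoDB query by partition key."""
    return FAKE_TASKS_TABLE.get(user_id, [])


def handler(event, context):
    """Entry point invoked by API Gateway (proxy integration assumed)."""
    # With a Cognito authorizer, the verified token claims are attached
    # to the request context before the function is invoked.
    claims = (
        event.get("requestContext", {})
        .get("authorizer", {})
        .get("claims", {})
    )
    user_id = claims.get("sub")
    if not user_id:
        return {
            "statusCode": 401,
            "body": json.dumps({"message": "Unauthorized"}),
        }

    tasks = get_tasks_for_user(user_id)

    # The proxy integration expects a status code and a string body.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"tasks": tasks}),
    }
```

Calling `handler` with an event that carries a `sub` claim returns that user's tasks; an event without claims gets a 401. The function holds no state between invocations, which is what lets the platform start and stop it on demand.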

Once again, this is a minimal scenario: there are dozens of serverless services we can take advantage of to manage events, queues, push notifications, etc. In practice,

serverless development involves coordinating a series of microservices that talk to each other when needed and shut down when not used,

without the need for a central system to stay continuously active to orchestrate the whole flow.
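The idea of small services that wake up only when an event concerns them can be sketched in a few lines. This is a toy, in-process illustration and not how any AWS service is implemented: the `subscribe` and `publish` helpers and the event names are hypothetical, and in a real architecture a managed service (a queue or an event bus) would take the place of the dictionary of subscriptions.

```python
from collections import defaultdict

# Maps an event type to the functions interested in it (hypothetical registry).
subscriptions = defaultdict(list)
# Records what happened, so the flow can be inspected afterwards.
log = []


def subscribe(event_type):
    """Decorator that registers a function for a given event type."""
    def register(fn):
        subscriptions[event_type].append(fn)
        return fn
    return register


def publish(event_type, payload):
    """Invoke only the functions subscribed to this event type."""
    for fn in subscriptions[event_type]:
        fn(payload)


@subscribe("task.created")
def store_task(payload):
    log.append(f"stored: {payload['title']}")
    # A service can emit a follow-up event instead of calling others directly.
    publish("task.stored", payload)


@subscribe("task.stored")
def notify_user(payload):
    log.append(f"notified: {payload['title']}")


publish("task.created", {"title": "Write blog post"})
```

Neither function knows about the other: each reacts to an event and stays idle otherwise, and new behaviour can be added by subscribing another function rather than by changing a central coordinator.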

Wow! So is serverless the panacea for all ills?

As with any other technology, there are some use cases in which serverless may not be advisable; for example, when our workload is so stable and predictable that the resources are continuously used at 100%. Still, those scenarios are pretty limited, and that is why developers prefer serverless resources more and more.
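A rough way to reason about this trade-off is to compare a pay-per-use bill with a fixed monthly server cost and look for the break-even volume. The sketch below uses entirely hypothetical prices, chosen only to keep the arithmetic readable; they are not actual rates from any provider, and real pricing also depends on execution time and memory.

```python
# Hypothetical prices for illustration only; not real provider rates.
PRICE_PER_MILLION_REQUESTS = 2.0   # pay-per-use: cost per million requests
FIXED_SERVER_COST = 50.0           # always-on server: flat monthly cost


def pay_per_use_cost(requests_per_month):
    """Monthly cost when you pay only for the requests actually served."""
    return requests_per_month / 1_000_000 * PRICE_PER_MILLION_REQUESTS


def break_even_requests():
    """Monthly request volume at which both models cost the same."""
    return FIXED_SERVER_COST / PRICE_PER_MILLION_REQUESTS * 1_000_000


for volume in (1_000_000, 25_000_000, 100_000_000):
    serverless = pay_per_use_cost(volume)
    cheaper = "pay-per-use" if serverless < FIXED_SERVER_COST else "fixed server"
    print(f"{volume:>11,} requests: {serverless:7.2f} vs {FIXED_SERVER_COST:.2f} -> {cheaper}")
```

Under these assumed numbers, pay-per-use wins comfortably at low or irregular traffic, while a workload that keeps a server busy around the clock sits past the break-even point, which is the limited scenario described above.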

What about the cloud providers?

In the serverless (and, more generally, cloud) computing landscape, there are many competitors; we have already mentioned Amazon Web Services, but other important players are Google Cloud Platform and Microsoft Azure. The providers have very similar offerings, but AWS still leads the market (see below) thanks to its vision and execution. That's why most of the services we build our applications with are on Amazon's cloud; still, since some services may be better with one provider or another, it's good to keep an open mind and stay informed about the daily developments in the exciting cloud universe.

In conclusion, “Serverless” may be a buzzword, but in just a few years it has proven its worth to the many IT companies and developers that fell in love with its powerful and convenient tools.

Serverless is the future, and it's here to stay. Embrace it with us!