In this blog post, we present a series of in-depth studies and practical cases showing how AI and Machine Learning algorithms have been crucial in our apps: they open new possibilities for digitization, speed up processes, and introduce surprising functionality for our users.

In this general overview, it is worth considering the challenges modern applications face when adopting innovative features, and how managed services contribute to the success of these solutions.

As Forbes also reported in this article, the transition from test to production is highly delicate: even the most accurate models very often fail when they meet real-world cases.

The causes are manifold.

First, unlike traditional software applications, where teams tend to move on to the next project after release, specialists must stay involved with the AI applications they deliver. By their nature, these solutions require continuous monitoring of the performance and accuracy of their predictions, which then feeds subsequent retraining.

Such systems need to be retrained regularly to avoid model deterioration and a loss of accuracy over time. In many cases, production data changes compared to the information used for training, and this leads to failures. For example, the target of an application may turn out to differ from the one assumed during design, making the trained model ineffective or unusable.
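To make the idea of monitoring production data more concrete, here is a minimal sketch of one common drift check, the Population Stability Index (PSI), applied to a single numeric feature. It is an illustration and not the monitoring we run in production: the function name, the number of bins and the sample data are all assumptions made for this example.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a training sample and a production sample of one numeric feature.

    Hypothetical helper written for this post; bins are equal-width over the
    range seen in the training sample.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all training values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Values outside the training range are clamped into the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    p, q = proportions(expected), proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Made-up data: production values are concentrated in the upper half of the training range.
training_sample = [i / 100 for i in range(100)]
production_sample = [0.5 + i / 200 for i in range(100)]
drift_score = population_stability_index(training_sample, production_sample)
```

A score of zero means the two distributions fall into the bins identically, and the score grows as production data moves away from the training data. Which threshold should trigger an alert or a retraining job is a judgment call that depends on the application.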

The need for these models to be functional in a production environment drives Machine Learning professionals to use managed services and tools. On AWS (Amazon Web Services), this is the case with Amazon SageMaker.

One reason to prefer a managed service is access to pre-trained models that were trained on a much larger volume of data. This results in models that are more resilient to the uncertainty of production data.

Let's now see some cases of AI applications within ITER IDEA solutions and how these allow us to overcome new limits.

In a previous blog post, we showed how Scarlett could "listen" and accurately transcribe the intervention reports of mobile technicians through integration with voice interfaces.

Guided by the needs of our customers engaged in interventions abroad, we have also added the ability to speak several languages, using services based on neural networks. Scarlett can therefore provide precise translations in the language of our partners' clients, who can confirm the intervention report while understanding the details of the activity carried out. Furthermore, reinforcement learning techniques allow the technical dictionary to improve over time, producing increasingly accurate results.

Today our platform translates over 3000 intervention reports each year.

The desire to amaze our customers and partners by solving real business challenges has pushed us to take further steps in textual content analysis, introducing new Sentiment Analysis features in our solutions. The procedure involves several stages, simplified here: tokenization of the text into words, which are then represented as a "bag of words"; removal of stop words and other terms that add no value; and, finally, semantic analysis to determine the polarity of the sentiment expressed by the sentence within its context.
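The stages above can be sketched in a few lines of Python. This is a deliberately simplified illustration: the stop-word list and the polarity lexicon are tiny, made-up examples, and a plain word-score lookup stands in for the semantic analysis step, which in a real solution would be handled by a trained model or a managed service.

```python
import re
from collections import Counter

# Tiny illustrative word lists (assumptions for this example, not a real lexicon).
STOP_WORDS = {"the", "a", "an", "is", "was", "and", "but", "with", "to", "of", "very"}
POLARITY = {
    "happy": 1, "satisfied": 1, "interested": 1,
    "unhappy": -1, "delayed": -1, "expensive": -1,
}


def tokenize(text):
    """Split lowercase text into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def bag_of_words(text):
    """Count each token that is not a stop word."""
    return Counter(t for t in tokenize(text) if t not in STOP_WORDS)


def sentiment(text):
    """Sum the polarity of known words and map the total to a label."""
    score = sum(POLARITY.get(word, 0) * count for word, count in bag_of_words(text).items())
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


label = sentiment("The customer was very satisfied and interested")  # "positive"
```

A lookup like this ignores negation and context ("not satisfied" would still score as positive), which is exactly the gap the semantic analysis stage is meant to close.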

Integrating this form of intelligence supports commercial agents in their visits: it analyzes the textual content written after each commercial activity and automatically maps customer mood and negotiation status based on recent visits.

For a data-driven company, this data is a significant strategic advantage, because it can be combined with other commercial information, such as turnover, number of visits, frequency of appointments, products involved, and other indicators defined by business intelligence tools.

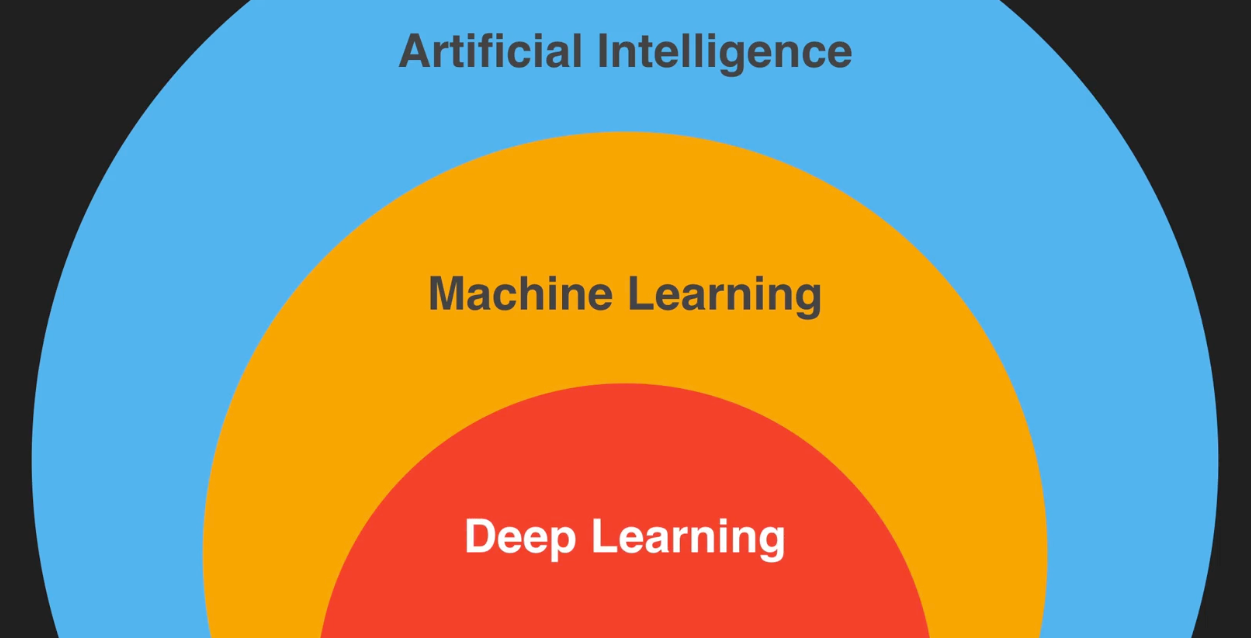

Finally, the last case is a very recent one for us and concerns the use of Deep Learning algorithms for image analysis.

Deep Learning algorithms used in image recognition typically rely on CNNs (Convolutional Neural Networks), which can stack tens or hundreds of layers, and in some research architectures more than a thousand. They are specially designed (and trained) to provide a remarkable speed-up in extracting information from images and in recognizing features from the pixels: for example, the position of objects or explicit content that requires automatic moderation.
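To give an idea of what a single convolutional layer computes, here is a minimal sketch in plain Python. It slides a small kernel over a grayscale image and sums the element-wise products at each position. The image and the kernel values are made up for illustration; in a real CNN the kernel weights are learned during training, and a framework runs this operation far more efficiently.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the products.

    This is the cross-correlation that deep learning frameworks call "convolution".
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# A 4x4 grayscale image: dark left half, bright right half.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hand-written kernel that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = convolve2d(image, kernel)
# feature_map == [[0, 18, 0], [0, 18, 0], [0, 18, 0]]
```

The resulting feature map is non-zero only in the column where the image switches from dark to bright, which is how a layer "recognizes" a feature from raw pixels. Stacking many such layers lets the network combine simple edges into more complex shapes.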

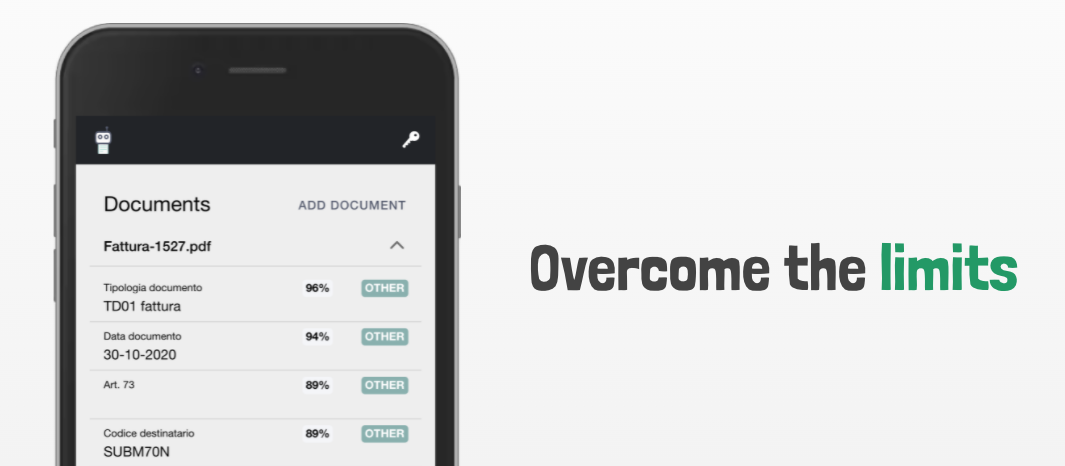

While investigating the potential of these Deep Learning algorithms, we decided to create a first POC (Proof-of-Concept): an application capable of analyzing and understanding paper documents. In addition to extending the capabilities of OCR (Optical Character Recognition) systems, the service recognizes a wide range of documents in a structured way, through solid, robust and suitably pre-trained models.

In this case, the tagline could only be:

Our take on AI applied to real business cases is straightforward: it is an extraordinary opportunity to overcome the limitations of previous systems and enable new perspectives.

Our invitation, then, is to explore the potential of these technologies and equip your business with the tools it needs to stay resilient, overcome current limits and be ready for the challenges of tomorrow.