Thanks to recent advances in computing and in deep learning algorithms for speech technology, we have seen the introduction of new forms of interaction between people and computers.

This evolution has brought many different technologies together to create physical voice assistants, i.e. devices capable of listening to and understanding the user's voice and acting accordingly. These objects rely on cloud computing to carry out a range of actions in the world around us, based on what we say or ask them.

Home automation is just one example of how conversational interfaces and speech recognition techniques are used every day. The application fields are countless: voice-controlled logistics systems, customer-service chatbots, support tools for personal care, and other solutions that improve our lives at home and at work.

In many cases, natural language processing makes technology more accessible. Studies conducted by the Amazon Web Services (AWS) team on Alexa (the voice assistant built into Amazon's devices) show that conversational interfaces are evolving more rapidly than earlier comparable technologies, such as input devices like keyboards and touchscreens.

Therefore, it is not surprising that many consider natural language interfaces one of the defining features of the applications of the near future.

Why Scarlett learnt to listen

One of our goals is to bring these technologies into everyday work, to address the real needs expressed by Scarlett's users.

Through our partnership with AGIC, we had the opportunity to collect feedback from Daniele:

“These days, in an increasing number of interventions, we are required to take additional precautions, such as wearing latex gloves during maintenance. Gloves prevent us from using the tablet's touchscreen, so it becomes impossible to take notes in real time. As a result, information and useful details can get lost when we document the interventions only at their conclusion, especially those that last several days.”

This is just one case in which speech technology can support everyday work, all the more so since users are already accustomed to voice recording and similar features in their favourite messaging apps.

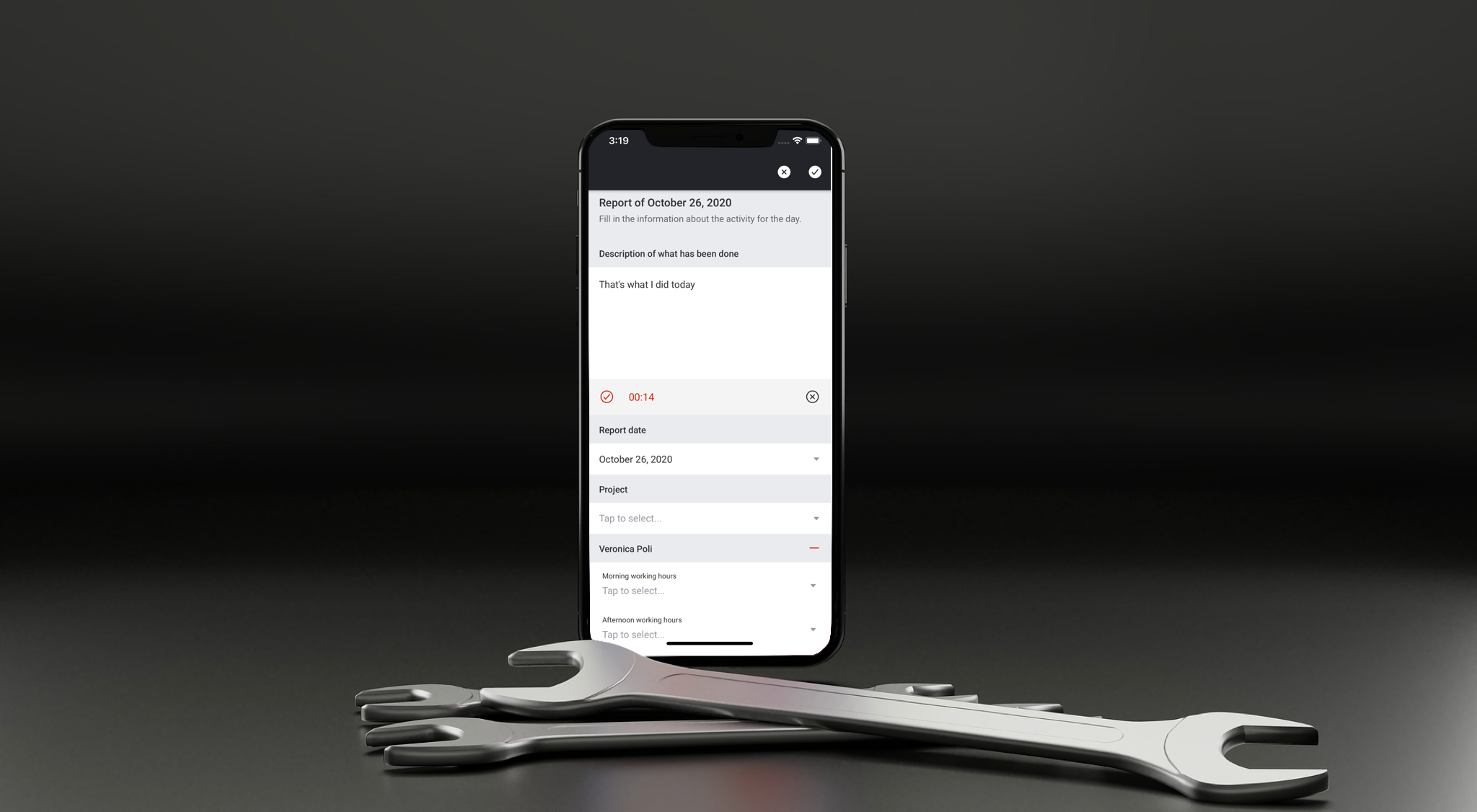

For this reason, in a recent update of Scarlett, we introduced a voice interface, available on both iOS and Android, that uses speech analysis and conversational models to let users dictate the main descriptive fields and report notes.

After only a few weeks with the beta version, the feature helped Daniele and his team produce solid, detailed first drafts of their intervention reports. Once the technicians have removed their gloves, they can refine the dictated text and submit it for customer validation. As a result, AGIC reported time savings of up to 30% when creating reports for multi-day activities.

Of course, this is just a glimpse of what these technologies can enable in Scarlett.

Our ultimate goal is to design a fully conversational interface that lets you fill in your activity reports without touching the device. This will make the service even more accessible and, therefore, more impactful on companies' daily routines.

In the meantime, we welcome your feedback and impressions on what we have introduced so far, to help us improve the feature. Do you have any? Let us know!